Learning by doing

I've always believed that errors and failures make us wiser. The more you try and research, the more you learn. What are you waiting for?

[PC & Playstation 4] C++ Graphics Engine

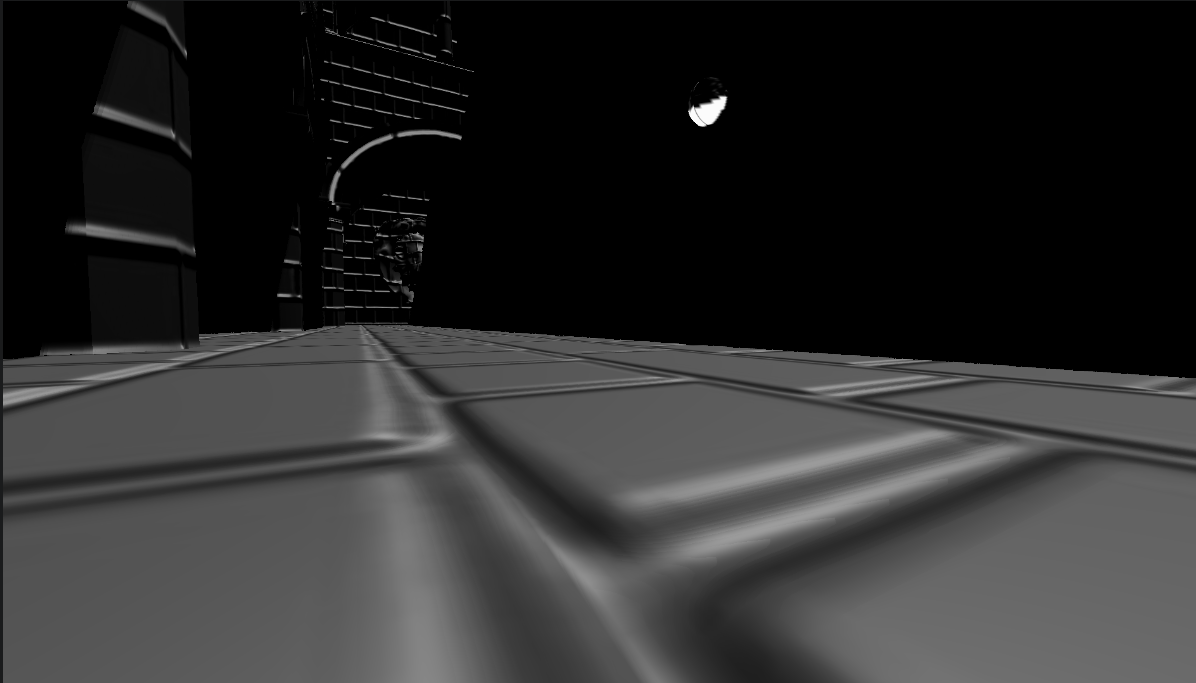

PC FOOTAGE

Graphics Programming

Ivan Ivanov Mandev and I developed the engine during the last year of our HND in Computing, for the Engine Programming subject (8 months, October 2020 - May 2021).

It was initially conceived for PC, using OpenGL as its rendering API, but our college later offered us the possibility of working with a PlayStation 4 development kit, so we decided to port the engine to that platform. Metro Engine features such as Physically Based Rendering, the ImGui integration and design, or the Lua integration (among others) are not listed above because, although Ivan and I helped each other with everything, we divided the work.

Note: Image Based Lighting is the only feature that IS NOT IMPLEMENTED in Metro Engine's PlayStation version; aside from that, the two versions are identical.

Once the project ended I started researching Global Illumination on my own, until I found Morgan McGuire's blog post about Dynamic Diffuse Global Illumination (DDGI) and his talk at GDC 2019. I fell in love with this technique and started working on getting it running in Metro Engine's PC version. See the DDGI section for more!

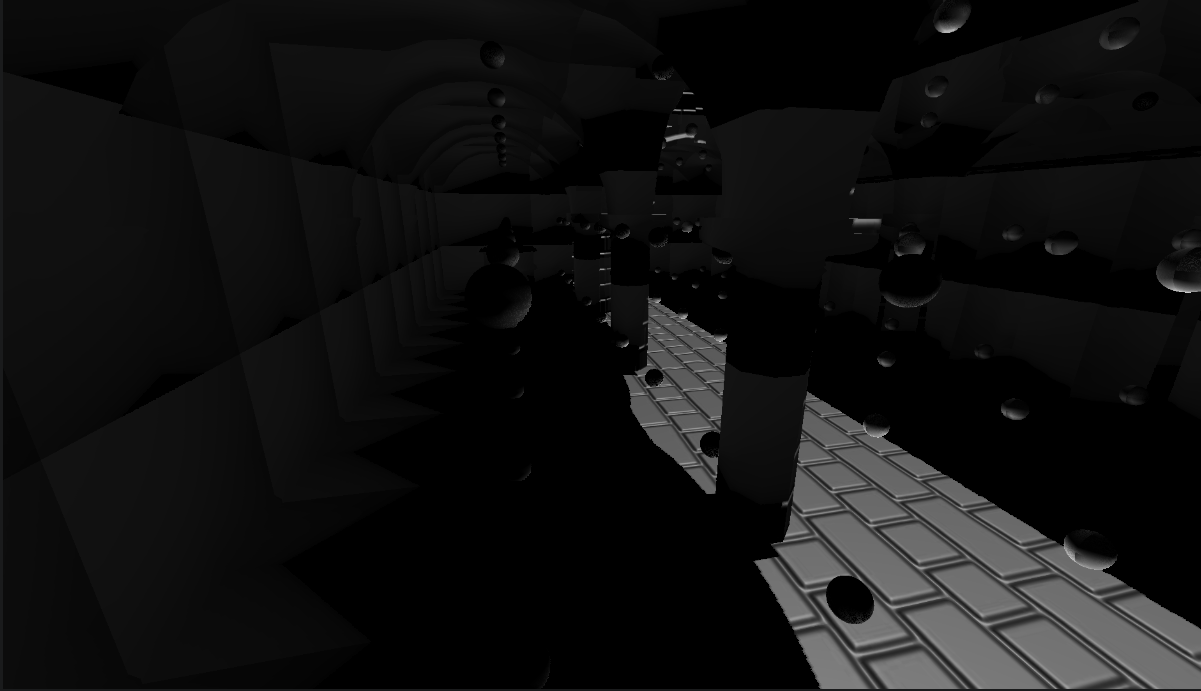

Deferred Rendering avoids calculating the lighting of the scene per object and instead processes it per pixel on the screen. To do that, we first store all the information in different textures (usually called G-Buffers) and then use those textures to calculate the light influence in the scene. Here is some PlayStation 4 footage of our engine showing exactly that (the lit scene and the normals of all objects in the scene).

PLAYSTATION 4 FOOTAGE

Dynamic Diffuse Global Illumination (DDGI) is another approach to achieving real-time Global Illumination, and it is closely related to (and based on) Real-time Global Illumination using Irradiance Probes.

In our standard Deferred Rendering light pass we receive textures with scene info such as world position and world normal (just as an example), and with that and a PBR algorithm we can calculate the direct lighting of the scene, which is perfectly sustainable at runtime (no heavy calculations). But if we had to calculate light bounces there... real time would only be a dream. The answer is to receive another texture with the scene's indirect lighting already calculated and add it to the direct lighting output we would have in a normal scenario. I know your next question: where does that indirect lighting come from? From a texture atlas containing the radiance info of the scene probes, stored in static memory.

Up to this point this is more or less the same as the Real-time Global Illumination using Irradiance Probes technique, but DDGI is different: to avoid light leaks (common in that approach), McGuire describes a visibility factor that is used when adding the indirect lighting in the standard deferred light pass, to know whether there is a wall or something else between the selected probe and the point we are lighting. DDGI also uses hardware-accelerated ray tracing (although it can run without RTX if we implement a BVH traversal algorithm on the GPU).
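To make the visibility factor more concrete, here is a minimal sketch of a Chebyshev-style test, which is how I understand the DDGI paper to handle it. It assumes each probe stores, per direction, the mean hit distance and the mean squared hit distance. The function name `probeVisibility` and its parameters are made up for this example and are not taken from Metro Engine.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical helper: returns a weight in [0, 1] for one probe.
//   meanDist    : average ray-hit distance stored for this probe direction
//   meanDistSq  : average squared ray-hit distance for the same direction
//   distToPoint : distance from the probe to the point being shaded
float probeVisibility(float meanDist, float meanDistSq, float distToPoint) {
    // The point is closer than the average occluder: fully visible.
    if (distToPoint <= meanDist) {
        return 1.0f;
    }
    // Variance of the stored distances, clamped so it is never zero or negative.
    const float variance = std::max(meanDistSq - meanDist * meanDist, 1e-6f);
    const float d = distToPoint - meanDist;
    // Chebyshev's inequality gives an upper bound on the chance that
    // the point is still visible from the probe.
    const float p = variance / (variance + d * d);
    // Cubing makes the falloff sharper, which reduces leaking through thin walls.
    return p * p * p;
}
```

A point behind a wall (far beyond the mean hit distance, with low variance) gets a weight close to zero, so that probe contributes almost nothing to the blend.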

The atlas with all the indirect lighting info needed to light the scene is updated whenever the GPU finishes its calculations, and that is exactly why this technique is extremely powerful: we do NOT have to wait for the indirect lighting info to be processed. We just read the atlas, and when the next update is ready the atlas is refreshed. This is a completely asynchronous approach, and because we can choose how often indirect lighting is recalculated, the technique is also potentially adaptable to hardware limitations.

From now on I will talk about how I implemented this technique in our engine, which uses OpenGL as its rendering backend. As this is my first iteration on this technique and my first implementation of a truly advanced illumination technique, all comments and advice are received with a big smile to continue learning! :)

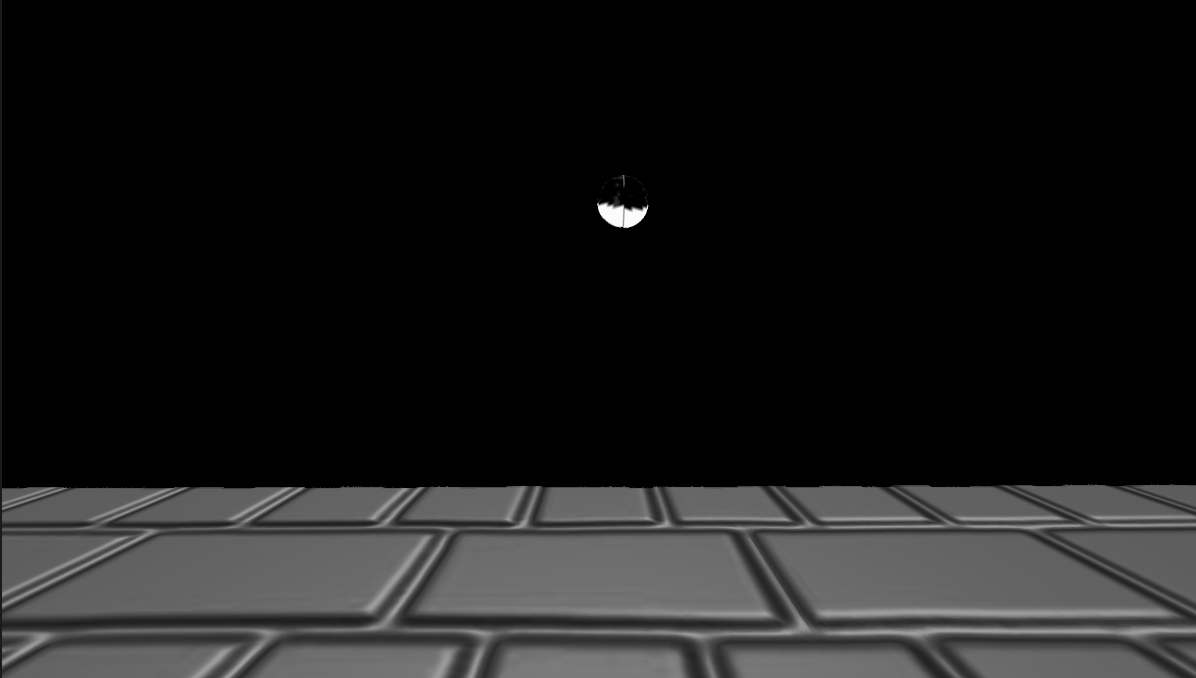

First of all we need to place points in the world, which will be called probes. For debugging purposes I recommend implementing instanced rendering in your environment and drawing spheres at those points (this way we know what is happening at all times). Using a well-known OBJ like Crytek Sponza also helps (there are examples of how this model looks everywhere, so comparing them with our results will easily reveal any artifacts).

Once I had my probes I created a compute shader and passed the array of positions to it in a Uniform Buffer Object (UBO).
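As an illustration of the probe placement step, here is a minimal sketch that fills a box with probes on a regular grid. The regular grid is an assumption (the post does not say how the probes were laid out), and `Vec3`, `ProbePosition` and `makeProbeGrid` are hypothetical names. Each position is stored as four floats because array elements in a std140 uniform block are padded to 16 bytes.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Four floats per probe so the CPU layout matches a std140 array of vec4.
struct ProbePosition { float x, y, z, pad; };
static_assert(sizeof(ProbePosition) == 16, "one probe must occupy 16 bytes");

// Hypothetical helper: places countX * countY * countZ probes,
// starting at `origin` and stepping by `spacing` along each axis.
std::vector<ProbePosition> makeProbeGrid(Vec3 origin, Vec3 spacing,
                                         int countX, int countY, int countZ) {
    std::vector<ProbePosition> probes;
    probes.reserve(static_cast<std::size_t>(countX) * countY * countZ);
    for (int z = 0; z < countZ; ++z) {
        for (int y = 0; y < countY; ++y) {
            for (int x = 0; x < countX; ++x) {
                probes.push_back({origin.x + static_cast<float>(x) * spacing.x,
                                  origin.y + static_cast<float>(y) * spacing.y,
                                  origin.z + static_cast<float>(z) * spacing.z,
                                  0.0f});
            }
        }
    }
    return probes;
}
```

The resulting vector can be uploaded as-is to the buffer, and the same array can feed the instanced debug spheres.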

Then I asked myself: how can I obtain a 360-degree photo of the scene? (Because that's what we need for each probe.)

Making a cubemap for each probe seems crazy to me, even doing it in a single draw call with geometry shaders, so I followed McGuire's advice and went for GPU-accelerated ray tracing. I needed all the scene objects' info in the shader to ray trace the scene, so I built the BVHs of the scene objects easily using NanoRT (I personally recommend this library; it's lightweight and single-header).

For those who are not used to working with ray tracing, Bounding Volume Hierarchies (BVHs) are used to divide space in an efficient way. If we have two objects in the scene, with a naive ray tracing approach we would need to test every triangle of both models (which can literally be millions of tris), but with a BVH-divided scene we cast a ray and first check whether it hits the boxes that contain each object; if it does not, we have saved a million triangle tests.

See these books written by Peter Shirley, who explains this in depth and better than me.
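The box check described above is commonly done with a "slab" test. Here is a minimal sketch, assuming axis-aligned bounding boxes; `Vec3`, `AABB` and `rayHitsBox` are names invented for this example and do not come from NanoRT or Metro Engine.

```cpp
#include <algorithm>
#include <utility>

struct Vec3 { float x, y, z; };
struct AABB { Vec3 min, max; };

// Returns true if the ray origin + t * dir, with t in [0, tMax],
// passes through the box. The box is treated as three pairs of
// parallel planes ("slabs"), one pair per axis.
bool rayHitsBox(const Vec3& origin, const Vec3& dir, const AABB& box, float tMax) {
    const float org[3] = {origin.x, origin.y, origin.z};
    const float d[3]   = {dir.x, dir.y, dir.z};
    const float lo[3]  = {box.min.x, box.min.y, box.min.z};
    const float hi[3]  = {box.max.x, box.max.y, box.max.z};

    float tNear = 0.0f;
    float tFar = tMax;
    for (int axis = 0; axis < 3; ++axis) {
        if (d[axis] == 0.0f) {
            // Ray is parallel to this slab: it can only hit the box
            // if the origin already lies between the two planes.
            if (org[axis] < lo[axis] || org[axis] > hi[axis]) return false;
            continue;
        }
        float t0 = (lo[axis] - org[axis]) / d[axis];
        float t1 = (hi[axis] - org[axis]) / d[axis];
        if (t0 > t1) std::swap(t0, t1);
        // Shrink the interval where the ray is inside every slab so far.
        tNear = std::max(tNear, t0);
        tFar = std::min(tFar, t1);
        if (tNear > tFar) return false;  // interval is empty: miss
    }
    return true;
}
```

One call like this per box replaces the intersection tests for every triangle inside it whenever the ray misses.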

With all the information ready, I sent it to the GPU using Shader Storage Buffer Objects (SSBOs) and then implemented a BVH ray traversal approach based on NanoRT's way of doing it.
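GLSL does not allow recursion, so a BVH traversal in a shader is usually written as a loop with an explicit stack. The sketch below shows that loop in C++ over a flattened node array. The `Node` layout, `hitsBox` and `traverse` are assumptions made for illustration; they are not NanoRT's actual data structures, and the triangle intersection itself is left out.

```cpp
#include <algorithm>
#include <cstdint>
#include <limits>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical flattened node: inner nodes have primCount == 0 and two
// children; leaves have primCount > 0 and a range of primitive indices.
struct Node {
    Vec3 bmin, bmax;
    std::int32_t left, right;
    std::int32_t firstPrim, primCount;
};

// Slab test of an unbounded ray (t >= 0) against the node's box.
bool hitsBox(const Vec3& o, const Vec3& d, const Node& n) {
    const float org[3] = {o.x, o.y, o.z}, dir[3] = {d.x, d.y, d.z};
    const float lo[3] = {n.bmin.x, n.bmin.y, n.bmin.z};
    const float hi[3] = {n.bmax.x, n.bmax.y, n.bmax.z};
    float tNear = 0.0f, tFar = std::numeric_limits<float>::max();
    for (int a = 0; a < 3; ++a) {
        if (dir[a] == 0.0f) {
            if (org[a] < lo[a] || org[a] > hi[a]) return false;
            continue;
        }
        float t0 = (lo[a] - org[a]) / dir[a];
        float t1 = (hi[a] - org[a]) / dir[a];
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::max(tNear, t0);
        tFar = std::min(tFar, t1);
        if (tNear > tFar) return false;
    }
    return true;
}

// Returns the indices of all primitives stored in leaves whose box the ray
// touches, in traversal order. nodes[0] is the root.
std::vector<std::int32_t> traverse(const std::vector<Node>& nodes,
                                   const Vec3& origin, const Vec3& dir) {
    std::vector<std::int32_t> candidates;
    if (nodes.empty()) return candidates;
    // In a shader this would be a fixed-size array; a vector keeps the sketch safe.
    std::vector<std::int32_t> stack;
    stack.push_back(0);
    while (!stack.empty()) {
        const Node& node = nodes[stack.back()];
        stack.pop_back();
        if (!hitsBox(origin, dir, node)) continue;  // skip the whole subtree
        if (node.primCount > 0) {
            for (std::int32_t i = 0; i < node.primCount; ++i)
                candidates.push_back(node.firstPrim + i);
        } else {
            stack.push_back(node.left);
            stack.push_back(node.right);
        }
    }
    return candidates;
}
```

Each returned index would then go through a ray-triangle test, keeping the closest hit.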

The first result I got when trying to draw surface normals (successfully, but with some artifacts) was this:

The next step was fixing the previously mentioned artifacts and tracing omnidirectionally from a probe. To light all the probes together with a standard Deferred Shading shader I needed to get surface normals and world positions.

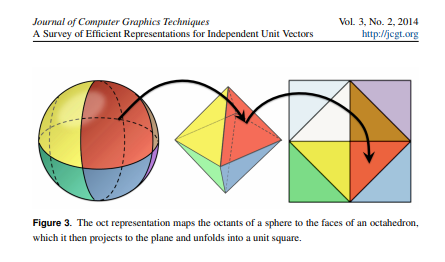

We need to trace a ray in a direction, get the hit data, and save it at a certain texture coordinate, but how can we convert a 3-dimensional vector (direction) into a 2-dimensional one (UV)? This link presents an approach.

This compression of the probe info consists of projecting the sphere onto an octahedron and then unwrapping its faces onto a single plane. This image illustrates the process:
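The direction-to-UV conversion can be sketched in a few lines. This is one common form of the octahedral mapping, and the linked approach may differ in details; `Vec2`, `Vec3`, `octEncode` and `octDecode` are names chosen for this example.

```cpp
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Sign function that never returns zero, so folds stay well defined on the axes.
static float signNotZero(float v) { return v >= 0.0f ? 1.0f : -1.0f; }

// Maps a unit direction to UV coordinates in [0, 1] x [0, 1].
Vec2 octEncode(Vec3 d) {
    // Project the sphere onto the octahedron |x| + |y| + |z| = 1.
    const float l1 = std::fabs(d.x) + std::fabs(d.y) + std::fabs(d.z);
    float u = d.x / l1;
    float v = d.y / l1;
    if (d.z < 0.0f) {
        // Fold the lower half of the octahedron outwards over the diagonals.
        const float fu = (1.0f - std::fabs(v)) * signNotZero(u);
        const float fv = (1.0f - std::fabs(u)) * signNotZero(v);
        u = fu;
        v = fv;
    }
    // Remap from [-1, 1] to [0, 1].
    return {u * 0.5f + 0.5f, v * 0.5f + 0.5f};
}

// Inverse mapping: UV in [0, 1] x [0, 1] back to a unit direction.
Vec3 octDecode(Vec2 uv) {
    const float u = uv.x * 2.0f - 1.0f;
    const float v = uv.y * 2.0f - 1.0f;
    Vec3 d{u, v, 1.0f - std::fabs(u) - std::fabs(v)};
    if (d.z < 0.0f) {
        // Undo the fold for the lower hemisphere.
        const float fx = (1.0f - std::fabs(d.y)) * signNotZero(d.x);
        const float fy = (1.0f - std::fabs(d.x)) * signNotZero(d.y);
        d.x = fx;
        d.y = fy;
    }
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    return {d.x / len, d.y / len, d.z / len};
}
```

When tracing from a probe, `octDecode` gives the ray direction for each texel of the probe's tile, and `octEncode` is what the light pass uses to look a direction up again.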

Here are some examples of the results I get when tracing omnidirectionally from a single probe:

World Position of Ray hits.

World Normals of Ray hits.

Lit texture computed from the previous info (position and normal) of ray hits.

Probe light info with a manual bilinear sampling applied (and a forced border for hardware bilinear sampling purposes).

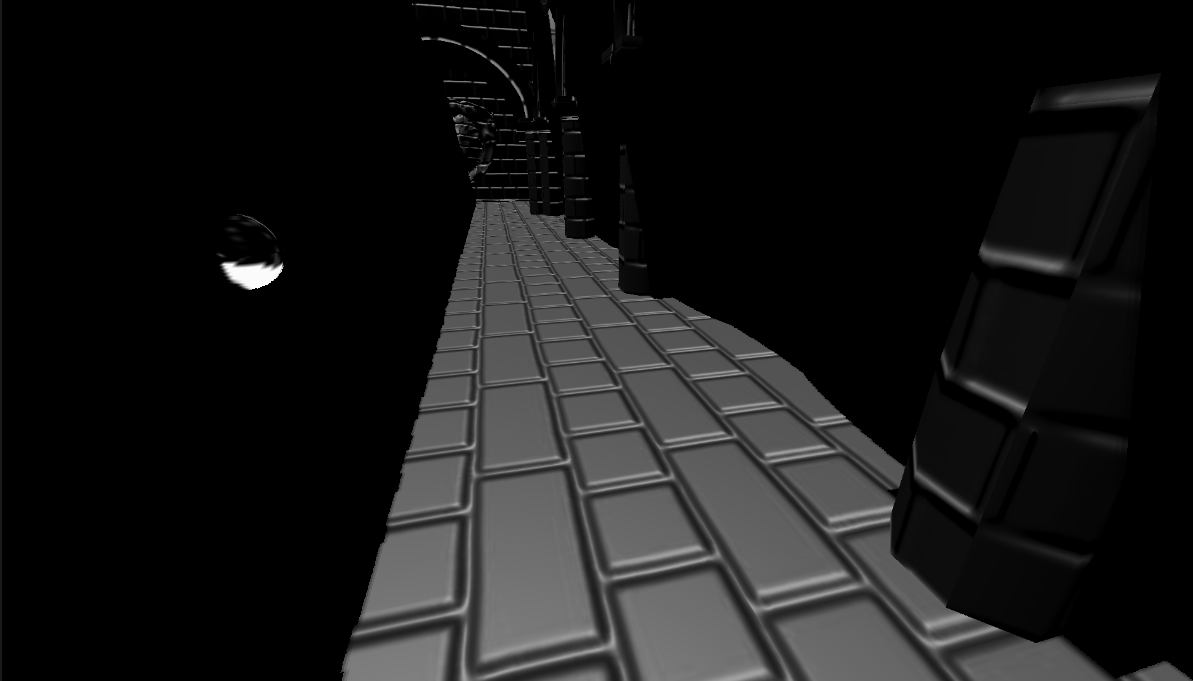

Here is the probe location from which the info shown above was gathered:

Perspective 0.

Perspective 1.

Perspective 2.

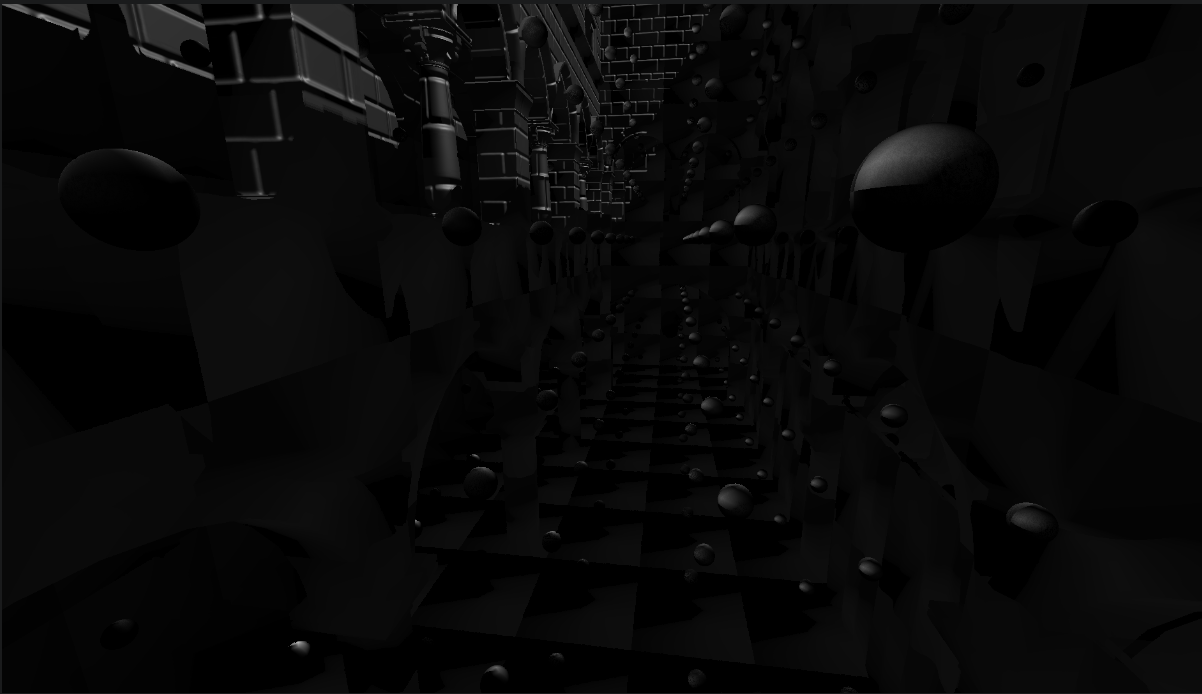

After all this, I started placing thousands of probes in the world, and the problem came when trying to blend their info to light a fragment. The data was not reaching the fragments correctly, and I think it has something to do with the way I compress data into the textures (although I have tried LOTS of fixes and it is still not working). The results I got were these (I decided to stop development here for reasons that I will explain later):

Indirect lighting of the first bounce in my Global Illumination approach. Perspective 0.

Indirect lighting of the first bounce in my Global Illumination approach. Perspective 1.

As you can see, there is indirect lighting, but there are some artifacts (mainly black zones) when blending from the 8 closest probes.
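For reference, blending the 8 closest probes is usually a trilinear interpolation over the grid cell that contains the shaded point. The sketch below assumes a regular probe grid and one scalar value per probe (one colour channel, for instance). `trilinearWeights` and `blendProbes` are hypothetical names, and this is not a diagnosis of the artifacts shown above.

```cpp
#include <algorithm>
#include <array>

struct Vec3 { float x, y, z; };

// Weights of the 8 probes at the corners of the cell containing p.
// Corner i uses bit 0 for +x, bit 1 for +y and bit 2 for +z.
std::array<float, 8> trilinearWeights(Vec3 p, Vec3 cellMin, Vec3 spacing) {
    // Fractional position of p inside the cell, clamped to [0, 1].
    const float fx = std::min(std::max((p.x - cellMin.x) / spacing.x, 0.0f), 1.0f);
    const float fy = std::min(std::max((p.y - cellMin.y) / spacing.y, 0.0f), 1.0f);
    const float fz = std::min(std::max((p.z - cellMin.z) / spacing.z, 0.0f), 1.0f);
    std::array<float, 8> w{};
    for (int i = 0; i < 8; ++i) {
        const float wx = (i & 1) ? fx : 1.0f - fx;
        const float wy = (i & 2) ? fy : 1.0f - fy;
        const float wz = (i & 4) ? fz : 1.0f - fz;
        w[i] = wx * wy * wz;
    }
    return w;
}

// Blends one value per probe. Each trilinear weight is scaled by that
// probe's visibility, and the result is divided by the total weight.
float blendProbes(const std::array<float, 8>& value,
                  const std::array<float, 8>& trilinear,
                  const std::array<float, 8>& visibility) {
    float sum = 0.0f;
    float weightSum = 0.0f;
    for (int i = 0; i < 8; ++i) {
        const float w = trilinear[i] * visibility[i];
        sum += w * value[i];
        weightSum += w;
    }
    // If every probe was rejected there is nothing to blend.
    return weightSum > 0.0f ? sum / weightSum : 0.0f;
}
```

Dividing by the weight sum matters: without it, every probe rejected by the visibility test would darken the result instead of handing its share to the remaining probes.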

The answer is no.

I was forced to stop developing Metro's Global Illumination for many reasons:

- The first is that I could not make this engine open source due to my college's terms, and I wanted to do this so that everyone could learn.

- The second is that I had about 4 more college projects running in parallel with this one, and I concluded that I was already happy with these results. I had researched and developed for an entire month while working on the other projects, so it was time to set this one aside for a bit and finish the rest at a level as advanced as Metro.

- Last but not least was a limitation of OpenGL. I did not manage to run asynchronous computations on the GPU: computing the probes blocks the OpenGL context, and with it the rendering. This makes the technique useless for real-time simulations (it is designed for that and based on async computations so... bad news).

But not everything is bad! Now that I have finished college and all the projects ended successfully, I am starting a Vulkan-based engine (an API that does allow async compute on the GPU). As the saying goes, when a door closes a window is ready to be developed!

Thanks for reading my post about Metro Engine and DDGI. I hope I motivated or helped you somehow in your development!

Check out my Twitter for news!